31 July 2013

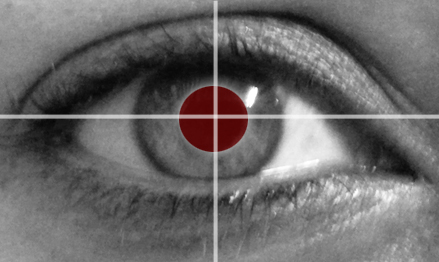

Keeping an eye on empowerment

"If we can see what you see, we can think what you think."

Eye tracking used to be one of those fabulous science-fiction inventions, along with Superman-like bionic ability. Could you really use the movement of your eyes to read people's minds? Or drive your car? Or transfix your enemy with a laser beam?

Well, actually, yes, you can (apart, perhaps, from the laser beam…). Eye trackers are not science fiction: they exist, and they are widely used around the world for a number of purposes.

Simply put, an eye tracker is a device for measuring eye positions and eye movement. Its most obvious use is in marketing, to find out what people are looking at (when they see an advertisement, for instance, or when they are wandering along a supermarket aisle). The eye tracker measures where people look first, what attracts their attention, and what they look at the longest. It is used extensively in developed countries to predict consumer behaviour, based on what – literally – catches the eye.
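The measurements just described (where people look first and what they look at longest) are usually computed against "areas of interest" marked on the advertisement or shelf being studied. The sketch that follows is a minimal illustration, not the software of any particular vendor. It assumes gaze samples arrive at a fixed sampling interval as `(timestamp_ms, x, y)` screen coordinates and that areas of interest are rectangles; the names `AOI` and `summarise_gaze` are hypothetical. Real analysis packages normally group raw samples into fixations before this step, whereas this sketch simply counts samples.

```python
from dataclasses import dataclass


@dataclass
class AOI:
    """A hypothetical rectangular area of interest, in screen pixels."""
    name: str
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def summarise_gaze(samples, aois, sample_interval_ms):
    """Return (order of first looks, dwell time per AOI, AOI looked at longest).

    samples: list of (timestamp_ms, x, y) tuples in time order (assumed format).
    Each sample that falls inside an AOI adds one sampling interval to its dwell time.
    """
    first_look = {}                       # AOI name -> timestamp of first sample inside it
    dwell = {a.name: 0.0 for a in aois}   # AOI name -> accumulated viewing time in ms
    for t, x, y in samples:
        for aoi in aois:
            if aoi.contains(x, y):
                first_look.setdefault(aoi.name, t)
                dwell[aoi.name] += sample_interval_ms
                break  # assume AOIs do not overlap
    order = sorted(first_look, key=first_look.get)
    longest = max(dwell, key=dwell.get) if any(dwell.values()) else None
    return order, dwell, longest


# Usage: a logo and a price tag, sampled every 100 ms (a deliberately low rate).
aois = [AOI("logo", 0, 0, 200, 100), AOI("price", 300, 400, 500, 480)]
samples = [(0, 350, 420), (100, 360, 430), (200, 50, 50), (300, 600, 600),
           (400, 100, 60), (500, 100, 60), (600, 120, 70)]
order, dwell, longest = summarise_gaze(samples, aois, 100)
print(order, dwell, longest)
```

Here the price tag catches the eye first, but the logo holds attention longer, which is exactly the distinction a marketer would want the tracker to reveal.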

Eye tracking also has more serious uses: psychologists, therapists and educators can apply it to analysis and education. Most excitingly, people with disabilities can use eye tracking to operate a computer, and through it a number of devices and machines. For people with impairments or disabilities, eye tracking can offer a whole new lease on life.

In South Africa and other developing countries, however, eye tracking is not widely used. Although off-the-shelf webcams and open-source software can be obtained extremely cheaply, they are complex to use and their quality cannot be guaranteed. Specialist high-quality eye-tracking devices have to be imported, and they are extremely expensive. Or rather, they used to be. Not anymore.

The Department of Computer Science and Informatics (CSI) at the University of the Free State has succeeded in developing a high-quality eye tracker at a fraction of the cost of the imported devices. Along with the hardware, the department has also developed specialised software for a number of applications. These would be useful for graphic designers, marketers, analysts, cognitive psychologists, language specialists, ophthalmologists, radiographers, occupational and speech therapists, and people with disabilities. In the not-too-distant future, even fleet owners and drivers will be able to use this technology.

"The research team at CSI has many years of eye-tracking experience," says team leader Prof Pieter Blignaut, "on both the technical and the practical side. We also provide clients with a multi-dimensional service that includes the equipment, training and support. We even provide feedback to users.

"We have a basic desktop model available that can be used for research and can be adapted so that people can interact with a computer. In future it will be possible to design a device that can operate a wheelchair. We are working on a model incorporated into a pair of glasses that will provide gaze analysis for people in their natural surroundings, for instance when driving a vehicle.

"Until now, the imported models have been too expensive," he continues. "But with our system, the technology is now within reach of anyone who needs it. This could lead to economic expansion and job creation."

The University of the Free State is the first manufacturer of eye-tracking devices in Africa, and Blignaut hopes that the project will contribute to nation-building and empowerment.

"The biggest advantage is that we now have a local manufacturer providing a quality product with local training and support."

In an eye-tracking device, a tiny infra-red light shines on the eye and causes a reflection, which is picked up by a high-resolution camera. Every eye movement changes this reflection, and the changes are then mapped. Infra-red light is not harmful to the eye, and the user does not even notice it, so eye movements remain completely natural.
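The article does not say how the changing reflection is mapped to a point on the screen. One widely used approach in video-based eye trackers, assumed here and not confirmed as the UFS method, measures the vector between the centre of the pupil and the infra-red reflection (the "glint"), then fits a polynomial from that vector to screen coordinates during a calibration in which the user looks at known targets. The minimal sketch below assumes the pupil-glint vectors have already been extracted from the camera image; the second-order polynomial model and the function names `calibrate` and `gaze_point` are illustrative assumptions. It uses only the standard library, solving the least-squares fit through the normal equations.

```python
def features(vx, vy):
    # Assumed model: second-order polynomial terms of the pupil-glint vector (vx, vy).
    return [1.0, vx, vy, vx * vy, vx * vx, vy * vy]


def solve(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_axis(vectors, targets):
    """Least-squares weights for one screen axis via the normal equations (A^T A) w = A^T t."""
    rows = [features(vx, vy) for vx, vy in vectors]
    k = len(rows[0])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    atb = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(k)]
    return solve(ata, atb)


def calibrate(vectors, screen_points):
    """Fit separate polynomials for screen x and y from calibration data (hypothetical API)."""
    wx = fit_axis(vectors, [p[0] for p in screen_points])
    wy = fit_axis(vectors, [p[1] for p in screen_points])
    return wx, wy


def gaze_point(model, vx, vy):
    """Map a measured pupil-glint vector to an estimated screen position."""
    wx, wy = model
    f = features(vx, vy)
    return (sum(w * v for w, v in zip(wx, f)), sum(w * v for w, v in zip(wy, f)))


# Usage with synthetic data: a 3 x 3 calibration grid of vectors and the
# screen targets the user was (hypothetically) looking at for each one.
vectors = [(vx, vy) for vy in (-1, 0, 1) for vx in (-1, 0, 1)]
targets = [(960 + 800 * vx + 30 * vx * vy, 540 + 450 * vy + 20 * vx * vx) for vx, vy in vectors]
model = calibrate(vectors, targets)
print(gaze_point(model, 0.5, -0.25))
```

Nine calibration points are used because the six-term polynomial needs at least six, and the extra points let the least-squares fit average out measurement noise in real recordings.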

Based on eye movements, a researcher can study cognitive patterns, driver behaviour, attention spans and even thinking patterns. A disabled person can use eye movements to interact with a computer, and future technology (still in development) would enable that computer to control a wheelchair or operate machinery.

The UFS recently initiated the founding of an eye-tracking interest group for South Africa (ETSA) and sponsors a biennial eye-tracking conference. The group's website is www.eyetrackingsa.co.za.

"Eye tracking is an amazing tool for empowerment and development in Africa," says Blignaut, "but it is not used as much as it should be because it is seen as too expensive. We are trying to bring this technology within the reach of anyone and everyone who needs it."

Issued by: Lacea Loader

Director: Strategic Communication

Telephone: +27 (0) 51 401 2584

Cell: +27 (0) 83 645 2454

E-mail: news@ufs.ac.za

Fax: +27 (0) 51 444 6393